Here is a list of statistical formulas for application in plant breeding programmes.

1. Statistics:

It is a broad discipline concerned with methods for collecting, organising, summarising, presenting and analysing data, and for drawing valid conclusions about the characteristics of the sources from which the data were obtained. It is basically concerned with data analysis.

2. Parameters:

Population characteristics such as the mean, variance and standard deviation are known as parameters.

3. Statistics:

Statistics are characteristics of samples. The singular form is statistic.

4. Population:

The aggregate of individual values, or of the individuals themselves from which these values are obtained, is known as the population.

5. Sample:

The selection of a part of the population in a random manner to represent the whole is known as sampling. The part selected is known as the sample.

6. Simple Random Sample:

A sample is called a simple random sample if it is obtained in a way that makes every possible sample with the same number of observations equally likely to be selected.

7. Sampling Error:

This term refers to the natural variation from one sample to another. It does not imply a mistake on the part of the experimenter or anyone else.

8. Sample Mean:

The sample mean (X̅) is a statistic that measures the centre of a sample.

X̅ = ΣX/n (n = number of observations)

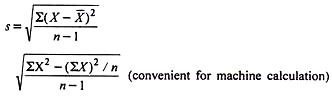
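A minimal Python sketch of the formula X̅ = ΣX/n follows; the observations are hypothetical and are not the values used in the worked example.

```python
def sample_mean(values):
    """Return the sample mean, X̅ = ΣX / n."""
    if not values:
        raise ValueError("at least one observation is required")
    return sum(values) / len(values)


# Hypothetical observations (n = 10).
observations = [1, 2, 3, 4, 5, 5, 6, 7, 8, 9]
print(sample_mean(observations))  # 5.0
```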

9. Sample Standard Deviation (s):

It is a statistic that measures the amount of variability within a sample, i.e. how far the sample observations lie from the sample mean. In the calculation, the deviations X − X̅ are determined first and then squared so that none of them is negative.

The sum of the squared deviations is divided by n − 1 (n = number of observations) to give the sample variance, s² = Σ(X − X̅)²/(n − 1). The divisor n − 1 is used rather than n because division by n gives a biased (too small) estimate of the population variance, whereas division by n − 1 removes this bias.

The square root of the sample variance is the sample standard deviation, commonly denoted by s. Taking the square root expresses the sample standard deviation in the same units as the original observations.

The counterpart of s for a population is sigma, σ (a parameter).

10. Variance:

This is the square of the standard deviation and is denoted by s² or σ², depending on whether it refers to a sample or a population.
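The sketch below applies the n − 1 divisor to hypothetical observations. Python's standard `statistics.variance` also divides by n − 1, so it is used as a cross-check.

```python
import math
import statistics


def sample_variance(values):
    """Sample variance, s² = Σ(X − X̅)² / (n − 1)."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean = sum(values) / n
    # Squaring the deviations X − X̅ makes every term non-negative.
    sum_of_squares = sum((x - mean) ** 2 for x in values)
    # Dividing by n − 1 (not n) gives an unbiased estimate of σ².
    return sum_of_squares / (n - 1)


def sample_sd(values):
    """Sample standard deviation s, in the units of the observations."""
    return math.sqrt(sample_variance(values))


observations = [1, 2, 3, 4, 5, 5, 6, 7, 8, 9]  # hypothetical data
print(round(sample_variance(observations), 3))  # 6.667
print(round(sample_sd(observations), 3))        # 2.582
# Cross-check against the standard library, which also divides by n − 1.
print(math.isclose(sample_variance(observations), statistics.variance(observations)))  # True
```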

11. Standard Error:

The standard deviation of the sample mean (s X̅) is known as the standard error. The square of the standard error is called the sampling variance.

s X̅ = s/√n
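A short sketch of s X̅ = s/√n on hypothetical data, plus a check that s = 2.79 and n = 10 (the figures used under "Application of Standard Deviation") give the standard error of 0.88 quoted there.

```python
import math


def standard_error(values):
    """Standard error of the mean, s X̅ = s / √n."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean = sum(values) / n
    s = math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))
    return s / math.sqrt(n)


observations = [1, 2, 3, 4, 5, 5, 6, 7, 8, 9]  # hypothetical data
se = standard_error(observations)
print(round(se, 3))       # 0.816
print(round(se ** 2, 3))  # sampling variance: 0.667

# s = 2.79 and n = 10, as in the worked example.
print(round(2.79 / math.sqrt(10), 2))  # 0.88
```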

12. Coefficient of Variability (CV):

The standard deviation expressed as a percentage of the mean is known as the coefficient of variability (also called the coefficient of variation).

CV = (s × 100)/X̅

This is helpful in comparing the variability of characters that have different means or are measured in different units. For example, the variability in plant height of okra may be compared with that of French bean.
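To illustrate the okra and French bean comparison, here is a sketch using hypothetical plant heights (invented for illustration, not measured data).

```python
import math


def coefficient_of_variability(values):
    """CV (%) = s × 100 / X̅."""
    n = len(values)
    mean = sum(values) / n
    if mean <= 0:
        # The CV is normally reported only for measurements with a positive mean.
        raise ValueError("CV requires a positive mean")
    s = math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))
    return s * 100 / mean


# Hypothetical plant heights (cm) of two crops with very different means.
okra = [95, 102, 110, 98, 105]
french_bean = [38, 42, 40, 45, 35]
print(round(coefficient_of_variability(okra), 1))         # 5.8
print(round(coefficient_of_variability(french_bean), 1))  # 9.5
```

Okra has the larger standard deviation here (about 5.87 cm against 3.81 cm), yet French bean is relatively more variable once each standard deviation is scaled by its own mean.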

Application of Standard Deviation:

For approximately normally distributed data, X̅ ± s covers about 68% of the observations, while X̅ ± 2s covers about 95%. In the given example (X̅ = 5, s = 2.79):

X̅ + s = 5 + 2.79 = 7.79

X̅ – s = 5 – 2.79 = 2.21

This means that, of the 10 observations in the given example, about two-thirds (10 × 2/3 ≈ 6.67) can be expected to lie between 2.21 and 7.79. The standard error (s X̅), calculated as 0.88, indicates that if further samples of 10 observations are drawn from the same population, their means may differ from the first sample mean of 5, but about two-thirds of these means would be expected to lie within 5 ± 0.88, and about 95% (19 in 20) within X̅ ± 2 standard errors, i.e. 5 ± 1.76.
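The arithmetic above can be reproduced from the summary values (X̅ = 5, s = 2.79, n = 10). The standard normal distribution from Python's `statistics.NormalDist` shows where the 68% and 95% figures come from. Note that ±2 standard deviations covers about 95.4%; exactly 95% corresponds to ±1.96.

```python
import math
from statistics import NormalDist

# Summary values from the worked example in the text.
mean, s, n = 5.0, 2.79, 10

# Range expected to hold about 68% (two-thirds) of individual observations.
print(round(mean - s, 2), round(mean + s, 2))  # 2.21 7.79
print(round(n * 2 / 3, 2))  # 6.67 observations expected in that range

# Standard error and the mean ± 2 standard error range.
se = s / math.sqrt(n)
print(round(se, 2), round(mean - 2 * se, 2), round(mean + 2 * se, 2))  # 0.88 3.24 6.76

# Coverage of ±1 and ±2 standard deviations under the standard normal curve.
z = NormalDist()
print(round(z.cdf(1) - z.cdf(-1), 3))  # 0.683
print(round(z.cdf(2) - z.cdf(-2), 3))  # 0.954
```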

Unpaired t Test:

When data are available on 2 varieties/treatments and there is no relationship between the observations forming a pair, the unpaired t test is applied to test whether the difference between the 2 treatment means is significant.

If there are 10 observations per variety, the degrees of freedom for t are (10 − 1) + (10 − 1) = 9 + 9 = 18. The calculated t value is compared with the table t value at 0.05 probability and 18 degrees of freedom; if the calculated t value exceeds the table value, the difference between the 2 means is significant.
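The text does not give the formula for t. The sketch below assumes the classical equal-variance (pooled) form, which is consistent with the 9 + 9 = 18 degrees of freedom stated above. The yields are hypothetical, and 2.101 is the two-tailed table t at 0.05 for 18 df only.

```python
import math


def unpaired_t(a, b):
    """Two-sample t test with a pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    m1, m2 = sum(a) / n1, sum(b) / n2
    ss1 = sum((x - m1) ** 2 for x in a)
    ss2 = sum((x - m2) ** 2 for x in b)
    df = (n1 - 1) + (n2 - 1)  # 9 + 9 = 18 for 10 observations each
    pooled_variance = (ss1 + ss2) / df
    se_diff = math.sqrt(pooled_variance * (1 / n1 + 1 / n2))
    return (m1 - m2) / se_diff, df


# Hypothetical yields of two varieties, 10 unrelated observations each.
variety_a = [5, 6, 7, 8, 9, 5, 6, 7, 8, 9]
variety_b = [4, 5, 6, 7, 8, 4, 5, 6, 7, 8]
t, df = unpaired_t(variety_a, variety_b)

T_TABLE = 2.101  # two-tailed table t at 0.05 probability, 18 df
print(round(t, 3), df)  # 1.5 18
print("significant" if abs(t) > T_TABLE else "not significant")  # not significant
```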

13. The Paired t Test:

This test is used to analyse paired observations. Where comparisons are made over a range of environments, it is more sensitive than the unpaired test, because working with the within-pair differences removes the variation among environments. Its use by plant breeders is limited, as it allows only 2 varieties to be compared.

In this test the data are arranged in pairs and the difference (d) is determined for each pair. Using the earlier formulae, the standard deviation of the differences (sd) and the standard error of the mean difference (s d̅ = sd/√n, where n is the number of pairs) are calculated. t is then calculated in the usual manner as t = d̅/s d̅, at (number of pairs − 1) degrees of freedom. A numerical example taken from Diwakar and Oswalt (1992), analysed first as independent samples and then as paired samples, is as follows:

‘t’ Test of Significance:

Let us assume the yield data on 2 cultivars (A and B) recorded at 10 locations, as given in the following table, and apply the t test, first assuming independent samples and then paired samples. The data and calculations are based on Diwakar and Oswalt (1992); clarifications have been added to make the calculations easier to follow.
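Here is a minimal sketch of the paired calculation (d, sd, s d̅, t = d̅/s d̅, number of pairs − 1 df). The yields below are hypothetical and are not the Diwakar and Oswalt (1992) data. 2.262 is the two-tailed table t at 0.05 for 9 df.

```python
import math


def paired_t(a, b):
    """Paired t test on the differences d = a − b; returns (t, df)."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    d_mean = sum(d) / n
    sd = math.sqrt(sum((di - d_mean) ** 2 for di in d) / (n - 1))
    sd_mean = sd / math.sqrt(n)  # s d̅, the standard error of the mean difference
    return d_mean / sd_mean, n - 1  # df = number of pairs − 1


# Hypothetical yields of cultivars A and B at the same 10 locations.
cultivar_a = [20, 25, 30, 22, 28, 35, 18, 26, 32, 24]
cultivar_b = [18, 24, 27, 20, 27, 33, 15, 25, 30, 21]
t, df = paired_t(cultivar_a, cultivar_b)

T_TABLE = 2.262  # two-tailed table t at 0.05 probability, 9 df
print(round(t, 3), df)  # 7.746 9
print("significant" if abs(t) > T_TABLE else "not significant")  # significant
```

In these invented yields, locations differ widely (15 to 35) but B is consistently 1 to 3 units below A. If the same numbers are treated as unrelated samples with a pooled variance, t is only about 0.82 at 18 df, which illustrates why pairing is more sensitive.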

14. Correlation:

Correlation is a measure of the strength of the linear relationship between 2 variables. The statistic commonly used is Pearson's product moment correlation coefficient, or simply the sample correlation coefficient, denoted by r. It is close to zero when there is no tendency for one variable to increase or decrease as the other variable increases.

It always lies between −1 and +1. It equals +1 only if all points in the scatterplot of the 2 variables lie on a straight line with an upward slope, and −1 only if all points lie on a straight line with a downward slope.

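Since r is the covariance of x and y divided by the product of their standard deviations, the numerator of the correlation formula should strictly contain n − 1 as divisor, and the denominator should contain √[(n − 1)(n − 1)] = n − 1 as divisor. However, these cancel each other and hence are omitted:

$$r = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sqrt{\sum (x - \bar{x})^2 \, \sum (y - \bar{y})^2}}$$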

The significance of r is tested by

$$t = \frac{r\sqrt{n - 2}}{\sqrt{1 - r^2}}$$

at n − 2 degrees of freedom. The equivalent of the sample correlation r for a population is the population correlation coefficient, designated by the Greek letter rho (ρ).
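A sketch on hypothetical measurements from 5 plants. It computes r, the t statistic at n − 2 df, and a check that dividing the covariance by s_x·s_y (each with the n − 1 divisor) gives the same r, because the divisors cancel.

```python
import math


def pearson_r(x, y):
    """Sample correlation coefficient r (the n − 1 divisors cancel)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t = r√(n − 2) / √(1 − r²), tested at n − 2 degrees of freedom."""
    if abs(r) >= 1:
        raise ValueError("t is undefined when |r| = 1")
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Hypothetical paired measurements on 5 plants.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
print(round(r, 3))                   # 0.775
print(round(t_for_r(r, len(x)), 3))  # 2.121, at 3 df

# Covariance / (s_x * s_y), each using n − 1, gives the same r.
n = len(x)
mx, my = sum(x) / n, sum(y) / n
cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
sx = math.sqrt(sum((a - mx) ** 2 for a in x) / (n - 1))
sy = math.sqrt(sum((b - my) ** 2 for b in y) / (n - 1))
print(math.isclose(cov / (sx * sy), r))  # True
```

With only 3 df, t = 2.121 is below the two-tailed table value of 3.182 at 0.05. So even r ≈ 0.78 is not significant for this small hypothetical sample.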

15. Regression:

The correlation coefficient measures the strength of the linear relationship between 2 random variables. The regression coefficient, on the other hand, is the amount of change in the dependent variable (y) for each unit change in the independent variable (x).

Regression is a mathematical relationship between 2 variables and is given by:

$$y = \bar{y} + b(x - \bar{x})$$

where y is the unknown value of the dependent variable, x is a known value of the independent variable, and b is the regression coefficient of y on x. If b is known, (y̅ − b x̅) is a constant, written as a, and the formula becomes:

$$y = a + bx$$
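The text does not say how b is obtained. The sketch below assumes the usual least-squares estimate, b = Σ(x − x̅)(y − y̅)/Σ(x − x̅)², with a = y̅ − b x̅ as defined above. The data are hypothetical.

```python
def fit_line(x, y):
    """Least-squares regression of y on x; returns (a, b) for y = a + bx."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx    # regression coefficient of y on x
    a = my - b * mx  # the constant a = y̅ − b x̅
    return a, b


x = [1, 2, 3, 4, 5]  # hypothetical independent variable
y = [2, 4, 5, 4, 5]  # hypothetical dependent variable
a, b = fit_line(x, y)
print(round(a, 3), round(b, 3))  # 2.2 0.6
print(round(a + b * 6, 3))       # predicted y at x = 6: 5.8
```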

16. Coefficient of Determination:

The error sum of squares, Σ(y − ŷ)², is the sum of the squared vertical distances of the sample points in the scatter plot from the least squares regression line. It therefore represents the variation in y that is unaccounted for after regression on x. The total sum of squares, Σ(y − y̅)², represents the total variation in y, that is, the sum of squared deviations of the sample observations from the sample mean.

The ratio of the unaccounted-for variation to the total variation is the proportion of the variation in y that is not accounted for by regression on x. Subtracting this proportion from 1 gives the proportion of the variation in y that is accounted for by regression on x. The statistic used to express this proportion is called the coefficient of determination, denoted by R².

$$R^2 = 1 - \frac{\text{variation in } y \text{ remaining after regression on } x}{\text{total variation in } y} = 1 - \frac{\sum (y - \hat{y})^2}{\sum (y - \bar{y})^2}$$

In simple linear regression, the coefficient of determination equals the square of the correlation coefficient, so the easiest way to find it is to calculate r².
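This sketch computes R² from the error and total sums of squares on hypothetical data. It then confirms that, for a straight-line fit, R² equals r².

```python
def fit_line(x, y):
    """Least-squares intercept a and slope b for y = a + bx."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def coefficient_of_determination(x, y):
    """R² = 1 − Σ(y − ŷ)² / Σ(y − y̅)²."""
    a, b = fit_line(x, y)
    my = sum(y) / len(y)
    error_ss = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    total_ss = sum((yi - my) ** 2 for yi in y)
    return 1 - error_ss / total_ss


def correlation(x, y):
    """Pearson's r, used here only to confirm that R² = r²."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / (sxx * syy) ** 0.5


x = [1, 2, 3, 4, 5]  # hypothetical data
y = [2, 4, 5, 4, 5]
R2 = coefficient_of_determination(x, y)
r = correlation(x, y)
print(round(R2, 3), round(r ** 2, 3))  # 0.6 0.6
```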